Experiment plan and results template

By Optimizely.

Streamline your process and improve your business

KEY FEATURES

Your team is full of ideas that they’d like to test out to learn about your customers and advance your business, and you need a process for collecting those suggestions. Optimizely, the leading experimentation platform, created this super helpful template your team can use to submit experiment ideas (with all of the relevant details). Using this template, you’ll create a single source of truth about your experiments and maintain a catalog of previous experiment data that you can use to fuel learning and shape future tests.

Why Confluence?

How to use the experiment plan and results template

Step 1. Cover the basics

The top table of the template has space to outline the basics – including the name of the experiment, the experiment owner, reviewers, approvers, and the status. Make sure to @ mention team members so they’re notified about their involvement, and include your Optimizely link for easy reference.

Step 2. Set a plan for your experiment

If you remember anything about the scientific method from school, then you know that there are quite a few steps that happen before the actual experiment. Use the Experimentation planning section of the template to lay the foundation for success. Provide a high-level overview of the experiment, establish your hypothesis, define your metrics and targets, establish your variations, and include any baseline data or notes you might need to refer to. As eager as you might be to jump right in with the testing, investing time in planning will set you up for a lot of learning.

Step 3. Outline your results

You ran the experiment and the results are in. Document them in the Results section of the template. Jot down your experiment start and end date, the link to your Optimizely results, and a short summary of the metrics that you monitored. Make sure you also assign a clear conclusion (such as “inconclusive” or “hypothesis proved”) here, so everybody can get the results of that experiment at a glance without wading through all of the details.

Step 4. Draw conclusions

Your results shouldn’t be the end of the road. You want to learn from each and every experiment (whether your hypothesis was correct or not), which means you need to identify some firm outcomes. In the Conclusions section of the template, highlight your primary goals and other metrics for easy reference. Then, write down key takeaways, questions, and observations and outline any next steps that your team should take. This means you’ll go beyond learning and actually use those findings to improve your business.

Confluence is a connected workspace that empowers teams to create, organize, and share knowledge. Easily create content, like dynamic pages, collaborative whiteboards, structured databases, and engaging videos. Pull in all your work to reduce context-switching and turn scattered information into a single source of truth.

Flexible and extensible, Confluence is used by all types of teams, from marketing to technical teams and program management to sales teams. With 100+ free templates, Confluence makes it easy for teams to get started quickly and efficiently.


Your comprehensive guide to creating an experiment plan.

Introduction

Experimentation is a highly cross-functional endeavor and, like any machinery with a lot of moving parts, it offers plenty of opportunities for failure. Shortcuts taken in experiment planning and execution can sow doubt in the reliability of your experiment results or even double your runtime due to missteps in logging, configuration, or mismatched analytical methods. Sometimes we have to slow down to speed up.

Writing and sharing experiment plans helps us to slow our thinking down just enough to clarify our objectives and the steps required for execution. In this guide, I will share what I've seen work in organizations running 100+ experiments a month. It is a piece of the process that was lacking in organizations I've seen struggle to get their experimentation programs off the ground.

What Is an Experiment Plan?

Just as we would write specifications for a design or engineering team, an experiment plan’s primary objective is to communicate the specifications required for executing an experiment. It outlines the motivation and context for our hypothesis, illustrates the different user experiences under control and treatment, and specifies who we intend to include in our test, when we will run the test, and for how long, among other details.

An experiment plan is more than documentation; it's an essential blueprint that guides teams in efficient execution and collaboration. It also serves as a contract and standardized process that safeguards experiment rigor and trustworthiness by "putting to paper" what we are committing to do. Some experiment plans include a checklist for quality assurance, mitigating the risk of compromised experiment data and time lost to rerunning misconfigured tests.

A good experiment plan is peer-reviewed and shared with stakeholders, facilitating a culture of transparency, partnership, and rigor. And finally, an experiment plan serves as an artifact to remind us of what was tested and why, creating institutional memory.

What Are the Essentials of an Effective Experiment Plan?

There are core components that are key to launching a successful experiment that you'll want to document. Your teams might find value in adding more information; however, use caution to avoid process bloat, since you want to minimize the risk of introducing too much friction. A rule of thumb for good documentation is that if it were a sheet of paper that a random person on the street picked up, they could walk away with a solid understanding of the problem, solution, and desired outcomes.

Here, we discuss the essential components of an experiment plan in-depth with examples of how the omission of each can result in outcomes varying from the not-so-desirable to the catastrophic.

Core Components of an Experiment Plan At a Glance

The problem statement

The hypothesis

The user experience

Who is included in the experiment (and where does bucketing happen)?

Sampling strategy

Primary, Secondary, Guardrail Metrics

Method of analysis

Baseline measure & variance

Statistical design

Decision matrix

Deep Dive Into the Components of an Experiment Plan

The Problem Statement

The problem statement concisely tells your collaborators and stakeholders what it is you are trying to solve for, in context. It describes the business problem and relevant background, proposed solution(s), and outcomes in just a few sentences. The context could be drawn from an analysis that demonstrates an area for improvement, insights gleaned from a previous experiment, or it could be motivated by user research or feedback. It is important to provide links to the motivating context for anyone who will need to understand the history of your product, detailing what has changed and why.

Without a clear problem statement that connects the motivation for your feature, the proposed solution, and hypothesized outcomes as a result of implementing this solution, it will be difficult to obtain buy-in from your stakeholders and leadership.

Note: If you have a business requirements doc (BRD) or a product requirements doc (PRD), this is a good place to link to it. Keep in mind that these documents serve different functions, and while there is some overlap, a data scientist does not want to parse context from engineering specifications any more than a developer wants to parse context from a statistical document. Neither a BRD nor PRD is sufficient on its own for the purposes of designing an experiment.

The Hypothesis

The hypothesis is intrinsically a statistical statement; however, don't let that daunt you if you're not a statistician. It should fall right out of a well-written problem statement. It is a more distilled problem statement, stripped of the business context, and generally takes the form of:

By introducing X, we expect to observe an INCREASE/DECREASE in Y,

where X is the change you are introducing and Y is the primary metric that ties back to the business objective. Y should hypothetically demonstrate an improvement caused by the introduction of the change.

Your hypothesis is the critical formative element for the statistical design required to create a valid experiment that measures what you intend to measure. It also plays a crucial role in metric selection and decision frameworks. Absent a good hypothesis, your objectives will be murky and success undefined, casting doubt on the experiment as a whole.

Note: The astute reader will note that the structure of the hypothesis above omits the null hypothesis and implies a one-sided test of significance, whereas the more common method of experimentation is a two-sided test designed to detect any change, positive or negative. I think this departure from a strict statistical definition is okay because 1. We include a distinct methods section, 2. The hypothesis is often written from a product point of view and the nuts and bolts of hypothesis testing are non-intuitive, and 3. The semantics are understood in the context of the desired direction of change. You might take a different approach that best fits the culture within your company. My sense is that writing a hypothesis in this way enables the democratization of experimentation.

The User Experience

Here, you want to provide screenshots, wireframes, or in the case where the difference is algorithmic or otherwise not obviously visual, a description of the experience for control and treatment.

We want to make sure that the specific change we're testing is clearly communicated to stakeholders, and that a record is preserved for future reference. This can also help with any necessary debugging of the experiment implementation.

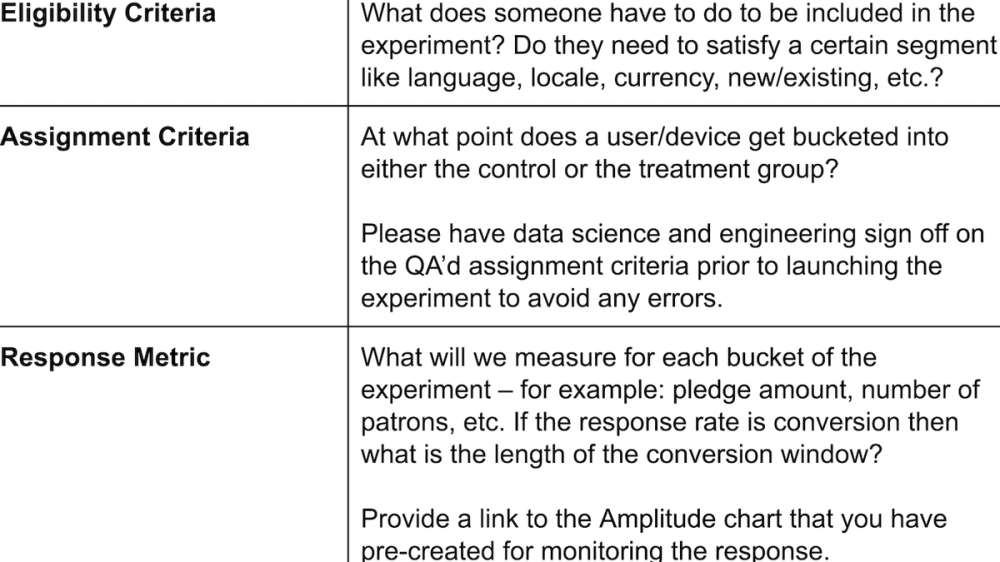

Who Is Included in the Experiment and Where Is the Experiment Triggered?

This description can vary from trivial to nuanced. A trivial example might be "all users". But for an experiment that is deployed on the checkout page, you would want to trigger the experiment allocation on the checkout page, so then the population you are sampling from would be "all users entering checkout". This might have further conditions if your population is restricted to a platform, like iOS, and a certain region. For example, "all users in NYC entering checkout on iOS".

Being explicit here helps us QA where the experiment allocation gets triggered and ensure we're filtering on the relevant dimensions. It also calls out any conditions on the population we would want to consider when pulling numbers for the power calculation.

The downside of missing this ranges from a misconfigured experiment and subsequently longer time to decision cycles to an incorrect estimation of the number of samples required, which could lead to an underpowered experiment and an inconclusive result, both of which are costly and frustrating.
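As a minimal sketch of what "being explicit" about the population can look like, the example population "all users in NYC entering checkout on iOS" can be written as a single predicate that both the trigger and the power-calculation query share. The `Visit` type, its field names, and the sample values are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    """Hypothetical record of a user landing on a page."""
    user_id: str
    region: str
    platform: str
    page: str


def is_eligible(visit: Visit) -> bool:
    """True only for NYC users entering checkout on iOS (the example population)."""
    return (
        visit.page == "checkout"
        and visit.platform == "iOS"
        and visit.region == "NYC"
    )


visits = [
    Visit("u1", "NYC", "iOS", "checkout"),
    Visit("u2", "NYC", "Android", "checkout"),  # wrong platform
    Visit("u3", "NYC", "iOS", "home"),          # not at the trigger point
]
eligible_ids = [v.user_id for v in visits if is_eligible(v)]
```

Keeping the conditions in one place makes it easier to QA that the allocation trigger and the numbers pulled for the power calculation describe the same population.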

Sampling Strategy

This is another topic where the design could be trivial or complex. In most cases, you will be sampling from "100% of the population, 50% Control, 50% Treatment". If you are lucky enough to have far more users than those required to run the experiment, you might have a situation where your sampling strategy could look like "20% of the population, 50% Control, 50% Treatment".

There are more advanced sampling strategies in switchback experiments, synthetic controls, or cases where a test is deployed in limited geo-locations. These are generally limited to special cases.

This information is useful for QAing the test configuration, inputs to the statistical design and power calculation, and in special cases, discussing with stakeholders any risks involved with a full 50/50 rollout. Some systems might not be able to handle the traffic given a new feature (think infrastructure or customer service teams).
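One common way to implement a strategy like "20% of the population, 50% Control, 50% Treatment" is deterministic hashing of the user ID. The sketch below is an assumption about how such bucketing might be built, not a description of any particular platform; the function names, the salt format, and the experiment name are all hypothetical.

```python
import hashlib
from typing import Optional


def _unit_interval(key: str) -> float:
    """Map a string to a stable pseudo-random number in [0, 1)."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    # 13 hex digits = 52 bits, which a float represents exactly.
    return int(digest[:13], 16) / 16**13


def assign(user_id: str, experiment: str, exposure: float = 0.20) -> Optional[str]:
    """Return 'control', 'treatment', or None if the user is not sampled.

    Two independent hashes are used: one decides whether the user is in the
    sampled share of the population, the other splits sampled users 50/50.
    """
    if _unit_interval(f"{experiment}:exposure:{user_id}") >= exposure:
        return None
    if _unit_interval(f"{experiment}:variant:{user_id}") < 0.5:
        return "control"
    return "treatment"


# The same user always gets the same answer for the same experiment.
first = assign("user-42", "checkout-test")
second = assign("user-42", "checkout-test")
```

Because the assignment depends only on the user ID and the experiment name, it can be recomputed during QA to confirm that the configured exposure and split match the plan.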

Primary, Secondary, and Guardrail Metrics

While I can't tell you which specific metrics you should be measuring, I want to share how to think about which metric(s) belong in primary, secondary, and guardrail metric categories.

I like to anchor my thinking in, "Would a deterioration in this metric prevent me from rolling out this feature?" If the answer is yes, it is what I call a decision making metric and is a good candidate as a primary metric.

Secondary metrics are selected in support of the primary objective. They help us to tell a more robust story. I like to call these "hypothesis-generating metrics" because when they surprise us, a deep dive can help us to develop subsequent hypotheses. But even when we find these metrics to be statistically significant, remember that they may be underpowered and/or not corrected for in the overall error rate and shouldn't contribute to go/no-go calls.

Guardrail metrics are metrics that you don't expect the experiment to move but that, when moved, could indicate unintended second-order (or higher) effects or bugs in the code. Examples might be unsubscribes or degradation in page load times. It is imperative that they are sensitive enough to be moved: metrics like returns or retention are too far downstream to detect an alarming effect in near-realtime or within the timeframe of the experiment.

To protect the integrity of your experiment and guard against cherry-picking, it is important to establish these metrics from the outset.

Method of Analysis

This is where you'll specify the statistical methods you plan to employ for your experiment. This might just be a two-sample t-test or more advanced methods in statistics or causal inference like a difference-in-differences test, group sequential methods, or CUPED++.

When in doubt, consult your friendly experimentation specialist.
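For a sense of what the simplest option involves, here is a rough sketch of a two-sample comparison of means using only the Python standard library. It uses a normal approximation to the t distribution, which is an assumption that is reasonable only for large samples; the function name and the toy data are hypothetical, and a real analysis would normally rely on a vetted statistics package.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def two_sample_z_test(control, treatment):
    """Compare two sample means with unequal-variance standard errors.

    Returns (difference in means, z statistic, two-sided p-value).
    The p-value uses the normal approximation, so it is only a
    large-sample stand-in for a proper t-test.
    """
    diff = mean(treatment) - mean(control)
    se = sqrt(variance(control) / len(control) + variance(treatment) / len(treatment))
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, z, p_value


# Toy data: the treatment values are the control values shifted up by 1.
control = [1.0, 2.0, 3.0, 4.0, 5.0] * 100
treatment = [x + 1.0 for x in control]
diff, z, p_value = two_sample_z_test(control, treatment)
```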

Baseline Measure & Variance

Often, you already have the baseline measure of the primary metric from when you generated or prioritized your hypothesis. In case you don't, you'll want to first collect these values before you can proceed with the next sections in the plan. (If you require analytics support in accessing this data, get your request in as early as possible to avoid bottlenecks and launch delays.)

When establishing the baseline measure (e.g. your average revenue per user, before the experiment), there are a couple of things to keep in mind.

First, if accessing from a dashboard, be aware that your business metric and experiment metric might not share the same definition. This is very important because a small deviation from the true baseline can cause a large deviation in your expected runtime. Make sure that the baseline values you obtain reflect the baseline experience you will measure. For example, your experiment population might be limited to a region, while the reference dashboard is aggregated across all users. Another way this shows up is that sometimes experiments use metrics like revenue per session where a session can take on any number of custom definitions - this is likely not reflected in a business analytics dashboard causing yet another way your metrics might differ.

Try to obtain a robust estimate. Every business has seasonal variation, so you might think that an estimate from a similar time period in the previous year is best, but also consider the changes in your user experience that have been deployed in the past year. Those two values might not be as comparable as you think. On the other hand, two data points close in proximity are more likely to be similar than two points more distant. For example, today's local temperature is likely more similar to yesterday's temperature than the temperature 3 months ago. So you might consider taking an aggregate measure looking back over a time period that is: 1. Near the date you plan to execute your experiment and 2. With a lookback window roughly equivalent to your expected experiment runtime. Don't forget to be aware of any anomalous data from holidays, rare events, and data pipeline issues or changes.
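The lookback idea above can be sketched as a small helper that keeps only observations from the window just before the planned launch and skips dates flagged as anomalous. This is a sketch under stated assumptions: the function name, the `(date, value)` observation format, and the sample numbers are all hypothetical.

```python
from datetime import date, timedelta
from statistics import mean, variance


def baseline(observations, launch, lookback_days, exclude=frozenset()):
    """Mean and sample variance of a metric over the window before launch.

    observations: iterable of (date, value) pairs, one per unit observed
                  (for example, revenue for one user on that date).
    exclude:      dates to drop, such as holidays or pipeline incidents.
    """
    start = launch - timedelta(days=lookback_days)
    values = [v for d, v in observations if start <= d < launch and d not in exclude]
    return mean(values), variance(values)


observations = [
    (date(2024, 2, 28), 100.0),  # before the lookback window
    (date(2024, 3, 1), 10.0),
    (date(2024, 3, 5), 12.0),
    (date(2024, 3, 10), 500.0),  # anomalous day, excluded below
    (date(2024, 3, 14), 14.0),
    (date(2024, 3, 15), 99.0),   # launch day itself is not part of the baseline
]
base_mean, base_var = baseline(
    observations,
    launch=date(2024, 3, 15),
    lookback_days=14,
    exclude={date(2024, 3, 10)},
)
```

The filter applied to `observations` should match the experiment population and metric definition, for the reasons given above about dashboards and experiment metrics diverging.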

Minimum Detectable Effect (MDE)

MDE is a tough input for beginners to wrap their heads around. It is essentially a shot in the dark guess around the relative effect size you expect from your experiment, but of course you don't yet know what the relative effect size is!

The thing you need to know before starting is this: let's say you choose your minimum detectable effect to be a 2% lift. If the true effect is larger, you are likely to have the resolution, by way of the number of samples collected, to detect it! But if your true effect is instead smaller than 2%, you won't have planned a sufficient sample size to distinguish the signal from the noise.

I like to approach this iteratively. So when you're taking your "shot in the dark", choose a value on the lower bound of what you think is a meaningful and possible lift for this test. Run your power calculation and see, based on average traffic values, how long that would take to test. If it is prohibitively long, you can adjust your MDE upwards, but within the range of plausible lifts. However, remember if the true effect is smaller, you might end up with an inconclusive test.
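The iteration can be sketched as a loop over candidate MDE values. The sample size function below assumes a two-sided test on a difference in means with equal allocation and a common standard deviation, which may not match your method of analysis; the baseline, standard deviation, and traffic figures are made up for illustration.

```python
from math import ceil
from statistics import NormalDist


def samples_per_variant(baseline_mean, std_dev, relative_mde, alpha=0.05, power=0.80):
    """Approximate samples needed per variant to detect a relative lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    delta = baseline_mean * relative_mde           # absolute effect size
    return ceil(2 * (z_alpha + z_beta) ** 2 * std_dev ** 2 / delta ** 2)


# Hypothetical inputs: replace with your own baseline and traffic.
BASELINE_MEAN = 10.0   # e.g. average revenue per user
STD_DEV = 20.0
DAILY_TRAFFIC = 20_000  # users entering the experiment per day, all variants

for mde in (0.01, 0.02, 0.05):
    n = samples_per_variant(BASELINE_MEAN, STD_DEV, mde)
    days = ceil(2 * n / DAILY_TRAFFIC)
    print(f"MDE {mde:.0%}: {n:,} samples per variant, about {days} days")
```

Under this formula, halving the MDE roughly quadruples the required sample size, which is why small adjustments to the MDE can change the runtime so much.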

If you find that in order to run a test in a reasonable timeframe the MDE gets pushed outside the range of plausible values, you might consider alternative statistical methods to assist in reducing the variance. Another approach is to perform a "Do No Harm" test (also referred to as a non-inferiority test).

How long is a reasonable time frame? This will vary quite a bit based on the size and maturity of your company and how much uncertainty is tolerable for your organization. I recommend a minimum of two-week runtimes (to capture two full weekly cycles). But I have also seen cases where experiments just take longer. Unless you are running a longitudinal study, I don't recommend testing longer than eight to twelve weeks - beyond that, externalities are more likely to creep into your controlled experiment and it becomes difficult to attribute the observed effect to the treatment alone. You're better off revising your methodology or taking a bigger swing at a more meaningful (and sizable) change.

Power and Significance Levels

Power and significance refer to the two most important parameters in choosing the statistical guarantees your test will offer (assuming you're using a frequentist test).

The widely adopted rules of thumb here are to set your power at 80% (i.e., a false negative rate of 100% - 80% = 20%) and your significance level at 5% (the false positive rate). Your org might use different heuristics, but in most cases, there is no need to adjust these.

Again, when in doubt, collaborate with a data scientist or your experimentation center of excellence.

Power Calculation (or "Sample Size Calculation")

In this section, you will use the inputs from your baseline measure, variance, MDE, and power and significance levels. The result will be the number of samples required per variant to run your experiment. It is also helpful to your reviewers to state how the calculation was arrived at (link to an online calculator, the package/method used, or formula used).

The power calculation is essential to committing to running your experiment to the required number of samples and guards against early stopping and underpowered experiments. It's a handshake contract that keeps all parties committed to conducting a trustworthy experiment.
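As one example of a formula you might cite, a commonly used approximation for a two-sided test comparing two means is shown below. It assumes equal allocation between control and treatment and a shared variance, so treat it as a sketch rather than the right formula for every design. Here \(\mu_{\text{baseline}}\) and \(\sigma^{2}\) are the baseline measure and variance, \(\alpha\) is the significance level, and \(1-\beta\) is the power.

```latex
n_{\text{per variant}} \approx
\frac{2\,\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}\,\sigma^{2}}{\delta^{2}},
\qquad
\delta = \text{MDE} \times \mu_{\text{baseline}}
```

With the rule-of-thumb settings of 5% significance and 80% power, \(z_{1-\alpha/2} \approx 1.96\) and \(z_{1-\beta} \approx 0.84\), so \((1.96 + 0.84)^{2} \approx 7.85\) and the formula reduces to roughly \(n \approx 15.7\,\sigma^{2}/\delta^{2}\) per variant.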

Estimated Runtime

Add up the number of samples required across all variants, divide by the estimated daily traffic, and round up to the nearest whole day. Use that value or 14 days, whichever is larger, as the estimated number of days your test will take.

A word of caution: the actual volume of traffic might differ from your estimate. If you see this happening, run your test to the required sample size rather than to a number of days. It's the number of samples that ensures your specified false positive and false negative rates hold.
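The arithmetic is small enough to sketch directly. The function names and the example figures are hypothetical; the 14-day floor comes from the recommendation above, and the stopping check reflects running to sample size instead of to a calendar date.

```python
from math import ceil


def estimated_runtime_days(samples_per_variant, n_variants, daily_traffic, min_days=14):
    """Days needed to collect all samples, never less than min_days."""
    total_samples = samples_per_variant * n_variants
    return max(ceil(total_samples / daily_traffic), min_days)


def reached_sample_size(collected_per_variant, samples_per_variant):
    """True once every variant has collected the planned number of samples."""
    return all(n >= samples_per_variant for n in collected_per_variant)


# Hypothetical plan: 156,978 samples per variant, two variants, 20,000 users per day.
long_test = estimated_runtime_days(156_978, 2, 20_000)   # traffic-bound
short_test = estimated_runtime_days(25_117, 2, 20_000)   # floor of 14 days applies
done = reached_sample_size([160_000, 150_000], 156_978)  # one variant is still short
```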

Decision Matrix

Once you have your metrics and experiment design in place, you'll want to enumerate over the decision metrics and possible outcomes (positive, negative, or inconclusive) and specify the decision you will make in each scenario.

It is imperative that this is done before data collection begins. First, it keeps us honest, guarding against cherry-picking and personal bias, unconscious or otherwise. We are all imperfect humans with attachments and hunches. The other reason is that it expedites decision-making post experiment. Your HiPPOs (highest paid person's opinion) will appear once an experiment has concluded. It is even worse when there are multiple stakeholders involved, each with different metrics they are responsible for. In my own experience, I have seen leaders talk in circles for weeks, delaying a final rollout decision. I have found it is a lot easier to bring your stakeholders and relevant leadership along on the experiment journey and commit to a decision given specific criteria before data has been collected than it is to try to align various stakeholders with different concerns once the data is available for results to be sliced and diced.
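To make the enumeration concrete, a decision matrix can be written down as a lookup table keyed by the outcome of each decision metric. The sketch below uses one primary metric and one guardrail metric; the decision rules are purely hypothetical placeholders for whatever your stakeholders agree to before launch.

```python
from itertools import product

OUTCOMES = ("positive", "negative", "inconclusive")


def decide(primary: str, guardrail: str) -> str:
    """Hypothetical pre-agreed rules; replace with your own commitments."""
    if guardrail == "negative":
        return "do not ship; investigate guardrail"
    if primary == "positive":
        return "ship"
    if primary == "negative":
        return "do not ship"
    return "iterate or rerun"


# Enumerate every combination so no scenario is left undecided.
decision_matrix = {
    (primary, guardrail): decide(primary, guardrail)
    for primary, guardrail in product(OUTCOMES, repeat=2)
}

for (primary, guardrail), decision in decision_matrix.items():
    print(f"primary={primary:<12} guardrail={guardrail:<12} -> {decision}")
```

Writing out all nine cells before data collection is the point: the table is agreed in advance, so the post-experiment conversation is a lookup, not a negotiation.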

Socializing experiment plans amongst your stakeholders and peers can go a long way toward building trust in your product development and testing programs. With thoughtful planning and transparent communication, we not only enhance the reliability of our experiments but also reduce errors in design and configuration and, in turn, reduce the time to ship features that make an impact. In the process, we create a record of what we have done and why. And having a plan that incorporates peer review and feedback only increases our confidence in shipping features that impact our customers and our businesses.



Explore real examples of Testing Plans

Learn how the best operators in tech think about testing plans. Explore 11 examples of testing plans so you never have to start from scratch.

Updated September 27, 2024

What is a testing plan?

A testing plan is a strategic document outlining how to evaluate software through various tests (unit, integration, system, acceptance), specifying scope, schedule, and success criteria. Reforge can enhance the development and execution of testing plans with expert resources and tools that streamline testing processes. Our support helps define clear roles, responsibilities, and the use of appropriate environments and tools, ensuring thorough bug and performance issue evaluations.

Popular Testing Plan examples

Explore the 10 most popular Testing Plans from top companies.

Experiment Doc Template at Patreon

by Adam Fishman, Created as VP Product and Growth @ Patreon

My team at Patreon used this doc to help product teams plan experiments more thoroughly and avoid common testing mishaps. It also served as a discussion doc and alignment vehicle for product, engineering, and data science.

- Adam's team at Patreon used this doc to plan experiments and align product, engineering, and data science teams.

- The doc includes sections for stating the problem, hypothesis, and what will be learned from the experiment.

- It also includes an experiment plan section with details on variants, lift, risks, and post-experiment decisions.

Experiment design & documentation template at Slack

by Fareed Mosavat, Created as Director of Product, Lifecycle @ Slack

At Slack, I used this experiment design and documentation template to get the team to openly document and pressure test the goals of any planned experiment, increasing the team’s sophistication around experimentation.

- Fareed used this experiment design and documentation template at Slack to increase the team's sophistication around experimentation.

- The template includes sections for product brief goals, audience, variants, expected outcomes and key metrics, results and takeaways, and appendix.

- Using this will allow a team to openly document and pressure test the goals of any planned experiment.

App Navigation Experiment Plan at UberEats

by Damian Wolfgram, Created as Lead Product Designer - Uber Eats @ Uber

An experimentation doc for a refresh of Uber’s main navigation to support the inclusion of Uber Eats at the time. Uber had millions of users and an extensive experimentation platform, but we did experience a lot of tension in changing the launch view from the initial ridesharing screen.

- As a designer at Uber Eats, Damian contributed to the Nava project, addressing the challenge of incorporating different products and user goals into the Uber app.

- The experiment aimed to enhance the initial entry point for Uber Eats within the Uber app, allowing users to seamlessly order and receive Eats on the go.

- Uber's sophisticated experiment platform, with millions of users globally, facilitated comprehensive testing, analysis, and optimization of the Nava project.

- Over time, the Nava project evolved, with continual optimizations, showcasing changes in the app's interface and navigation strategy.

Experiment Launch Checklist from Alexey Komissarouk

by Alexey Komissarouk, Created as Head of Growth Engineering @ MasterClass

In this sample experiment launch checklist, I lay out how the team can avoid common pitfalls before even starting their experiment.

- In this sample experiment launch checklist, Alexey lays out how a team can avoid common pitfalls before even starting their experiment.

- Alexey appreciated the low-ego culture at MasterClass, where PMs manually QA'd experiments.

- MasterClass set a high bar for experiment launches, which Alexey noticed when consulting.

- A company-specific experiment launch checklist can be developed by reviewing postmortems and considering different conditions and segments.

Amazon 3P seller-business subscription program QA test plan

by Damilola Oyedele Onwah, Created as Senior Product Manager - Amazon Business @ Amazon

This was a QA testing plan for the 3P Seller-Business subscription program before and after initial launch. Our goal was to ensure all technical components were working as intended.

- Damilola led the QA testing for Amazon's 3P Seller-Business subscription program.

- The plan aimed to ensure all product requirements were met and technical components functioned properly before launch.

- Testing was phased, focusing first on immediate features for Business Customers and 3P Sellers, then on backend changes and tech debt.

- The goal was to provide a seamless experience for both Amazon 3P Sellers and Business Customers post-launch.

Constriction A/B test with promo code by Andrey Andreev

by Andrey Andreev, Created as Head of eMerchandising @ METRO Russia

An overview of an A/B test utilizing a promo code and the resulting business impact from our experiment.

- Andrey conducted an A/B test to assess the impact of presenting promo codes to new users, aiming to avoid traditional methods like banners.

- The experiment was inspired by an Ocado case study, focusing on increasing average revenue per user without exceeding budget.

- The objective was to find more effective ways to engage new consumers and encourage product or service trials.

- The test reflects the importance of innovative promotional strategies in enhancing business outcomes.

A/B test with UI change in search bar at Metro

Learn how Metro’s collaboration with REO enhanced search interactions and conversion rates through strategic design choices and Gravity Field's tailored features.

- Andrey led the A/B test at Metro to improve search bar functionality.

- The project focused on UI enhancements through collaboration with REO.

- Strategic design choices aimed to boost user interactions and conversions.

- Gravity Field provided tailored features to optimize the search experience.

Sticky header A/B test hypothesis

A product manager role exercise proposing an A/B test aimed at enhancing user experience and boosting revenue through conversion.

- Andrey proposed an A/B test to improve user experience and boost conversion for increased revenue.

- The test used the ICE technique, scoring highly for potential impact, success chance, and execution simplicity.

- Initial results showed minor metric improvements but lacked statistical significance, indicating a need for larger sample sizes or extended testing periods.

- Future tests should focus on early journey metrics like CTR and PLP transitions for quicker insights and better data-driven decisions.

A/B test report for homepage loop banners by Andrey Andreev

A report for a homepage banner A/B test for a client. Using hypothetical numbers, this report demonstrates how we execute and report a critical split test for our clients.

- Andrey conducted an A/B test report for a client's homepage banner, using hypothetical data to illustrate the process.

- The test prioritized financial impact (ARPU) due to limited resources, pushing the experiment to 100% on the platform for decision-making.

- Andrey highlighted the development of a culture of experimentation, with a current rate of 12-16 tests launched monthly.

- Despite resource constraints limiting the ability to test all modifications, Andrey emphasizes the importance of experimentation to identify impactful ideas.

Writing effective prompts for test plans and code

by Roman Gun, Created as Vice President, Product @ Zeta Global

LLMs can be wonderful tools when it comes to helping PMs think more like engineers.

- Roman explains how LLMs help product managers think like engineers, enhancing their ability to create detailed prompts for test plans, code, and Gherkin scenarios.

- The guide outlines steps for identifying needs, gathering information, drafting and refining prompts, and finalizing comprehensive testing instructions.

- Roman emphasizes understanding technical details, using multimodal inputs, and involving humans in the QA process for effective testing.

- Utilizing LLMs for QA bridges knowledge gaps, improves collaboration, and ensures robust, scalable testing processes.


Other popular Testing Plans


Yuriy Timen, Created while an advisor

A comprehensive plan to test campaign performance using YouTube in addition to other paid channels. This was unique in that it included working with a prominent influencer on a health-related brand, which is highly-regulated.


A Quick Guide to Experimental Design | 5 Steps & Examples

Published on 11 April 2022 by Rebecca Bevans. Revised on 5 December 2022.

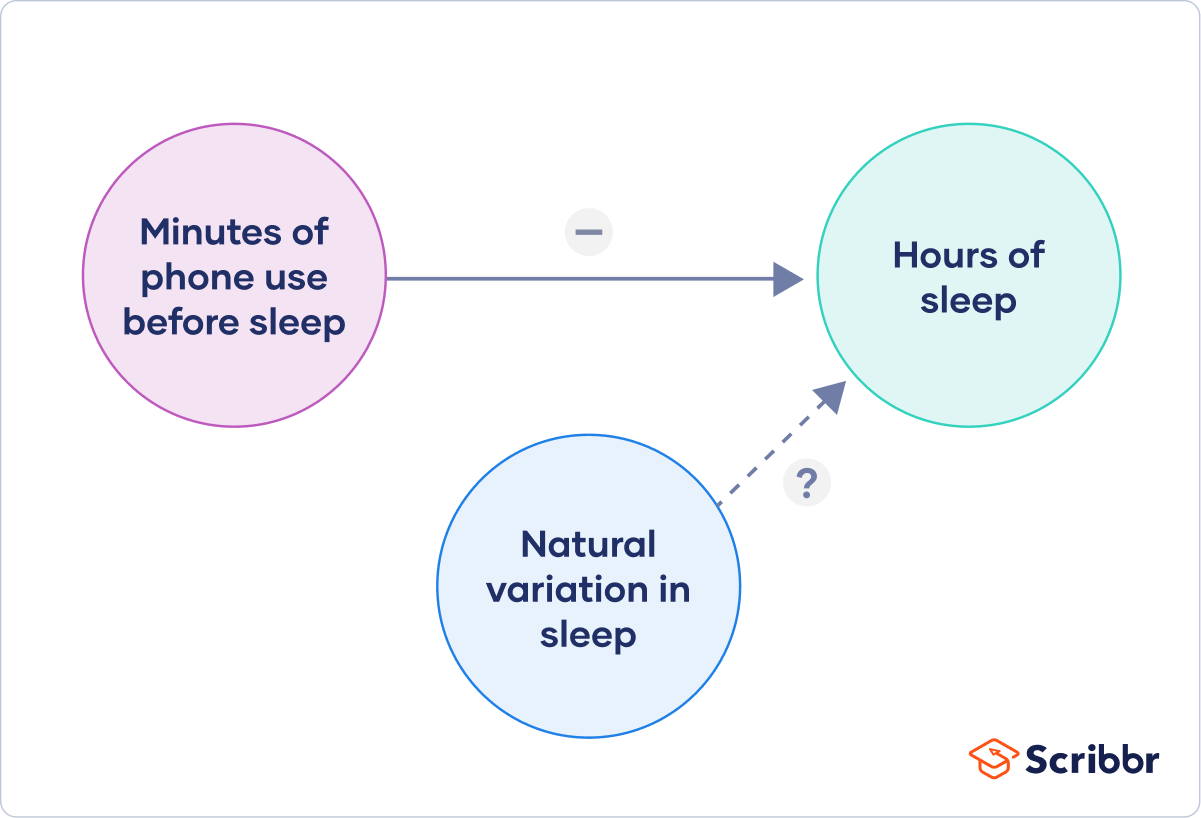

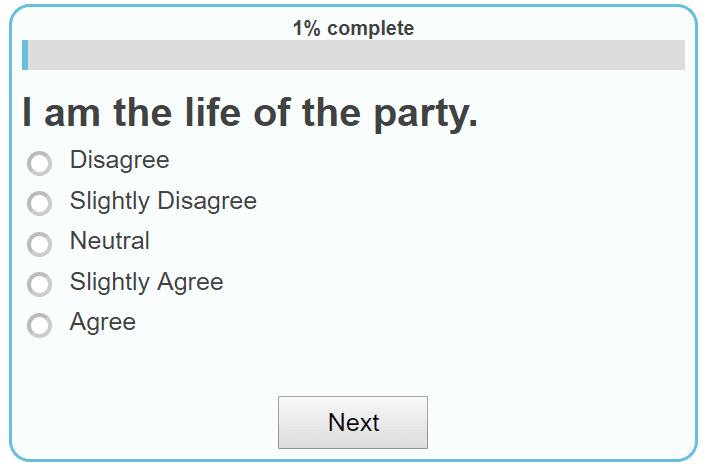

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Frequently asked questions about experimental design

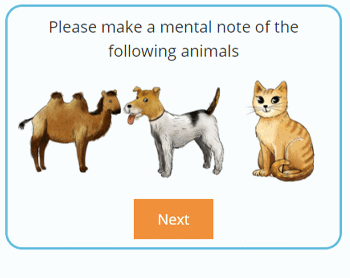

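Step 1: Define your variables

You should begin with a specific research question. We will work with two research question examples, one from health sciences (how does phone use before bedtime affect sleep?) and one from ecology (how does air temperature affect soil respiration?).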

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.


Step 2: Write your hypothesis

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

Step 3: Design your experimental treatments

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalised and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil-warming example, you could increase temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone-use example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

Step 4: Assign your subjects to treatment groups

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which determines how much confidence you can have in your results.
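As a rough illustration of how study size relates to statistical power, the sketch below applies the common normal-approximation formula for comparing two group means, n ≈ 2(z₁ + z₂)² / d² per group, where d is the standardised effect size. The function name and the default values (5% significance, 80% power) are assumptions chosen for illustration; they are not part of this guide, and a dedicated power-analysis tool would be more exact for small samples.

```python
import math
from statistics import NormalDist


def subjects_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects needed per group for a two-sided comparison of two means.

    effect_size is the standardised difference (difference in means / standard deviation).
    Uses the normal approximation, so it slightly underestimates needs for small samples.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = NormalDist().inv_cdf(power)          # quantile matching the desired power
    n = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return math.ceil(n)


# Smaller expected effects need many more subjects to detect.
for d in (0.8, 0.5, 0.2):
    print(d, subjects_per_group(d))
```

The point of the sketch is the shape of the relationship: halving the expected effect size roughly quadruples the number of subjects required.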

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomised design vs a randomised block design.

- A between-subjects design vs a within-subjects design.

Randomisation

An experiment can be completely randomised or randomised within blocks (aka strata):

- In a completely randomised design, every subject is assigned to a treatment group at random.

- In a randomised block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.
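The two approaches can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names, the subject records, and the choice of age band as the blocking characteristic are all hypothetical, and the treatments are dealt out in turn after shuffling so that group sizes stay balanced.

```python
import random
from collections import Counter, defaultdict


def completely_randomised(subjects, treatments, seed=None):
    """Shuffle all subjects, then deal them out to the treatments in turn."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomised_block(subjects, block_of, treatments, seed=None):
    """Group subjects by a shared characteristic, then randomise within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of(s)].append(s)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)
        for i, s in enumerate(members):
            assignment[s] = treatments[i % len(treatments)]
    return assignment


# Hypothetical subjects: (id, age band). The age band is the blocking characteristic.
subjects = [(f"p{i}", "younger" if i < 6 else "older") for i in range(12)]
treatments = ["no phone use", "low phone use", "high phone use"]

simple = completely_randomised(subjects, treatments, seed=1)
blocked = randomised_block(subjects, lambda s: s[1], treatments, seed=1)

# Within every age band, each treatment level is used equally often.
print(Counter((s[1], t) for s, t in blocked.items()))
```

In the completely randomised version, an age band could end up over-represented in one treatment by chance; blocking rules that out for the chosen characteristic.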

Sometimes randomisation isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomising or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.
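One way to counterbalance is to use every possible treatment order equally often and to decide at random which subject gets which order. The sketch below assumes that approach (often called full counterbalancing); the function name and subject labels are hypothetical, and with many treatments the number of orders grows quickly, so other schemes may be more practical.

```python
import itertools
import random


def counterbalanced_orders(subjects, treatments, seed=None):
    """Assign each subject a treatment order, cycling through all permutations."""
    rng = random.Random(seed)
    orders = list(itertools.permutations(treatments))
    shuffled = list(subjects)
    rng.shuffle(shuffled)  # randomise which subject receives which order
    return {s: orders[i % len(orders)] for i, s in enumerate(shuffled)}


# Six hypothetical subjects and three treatment levels give 3! = 6 orders, each used once.
schedule = counterbalanced_orders([f"s{i}" for i in range(6)], ["none", "low", "high"], seed=0)
for subject, order in sorted(schedule.items()):
    print(subject, order)
```

When the number of subjects is a multiple of the number of orders, each treatment appears in each position equally often, so order effects are spread evenly across treatments.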

Step 5: Measure your dependent variable

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimise bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalised to turn them into measurable observations. To measure hours of sleep in the phone-use example, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

Frequently asked questions about experimental design

Experimental designs are a set of procedures that you plan in order to examine the relationship between variables that interest you.

To design a successful experiment, first identify:

- A testable hypothesis

- One or more independent variables that you will manipulate

- One or more dependent variables that you will measure

When designing the experiment, first decide:

- How your variable(s) will be manipulated

- How you will control for any potential confounding or lurking variables

- How many subjects you will include

- How you will assign treatments to your subjects

The key difference between observational studies and experiments is that, done correctly, an observational study will never influence the responses or behaviours of participants. Experimental designs will have a treatment condition applied to at least a portion of participants.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word ‘between’ means that you’re comparing different conditions between groups, while the word ‘within’ means you’re comparing different conditions within the same group.

Cite this Scribbr article


Bevans, R. (2022, December 05). A Quick Guide to Experimental Design | 5 Steps & Examples. Scribbr. Retrieved 1 November 2024, from https://www.scribbr.co.uk/research-methods/guide-to-experimental-design/


Experiment Plan Template

Helpful for Education Planning.

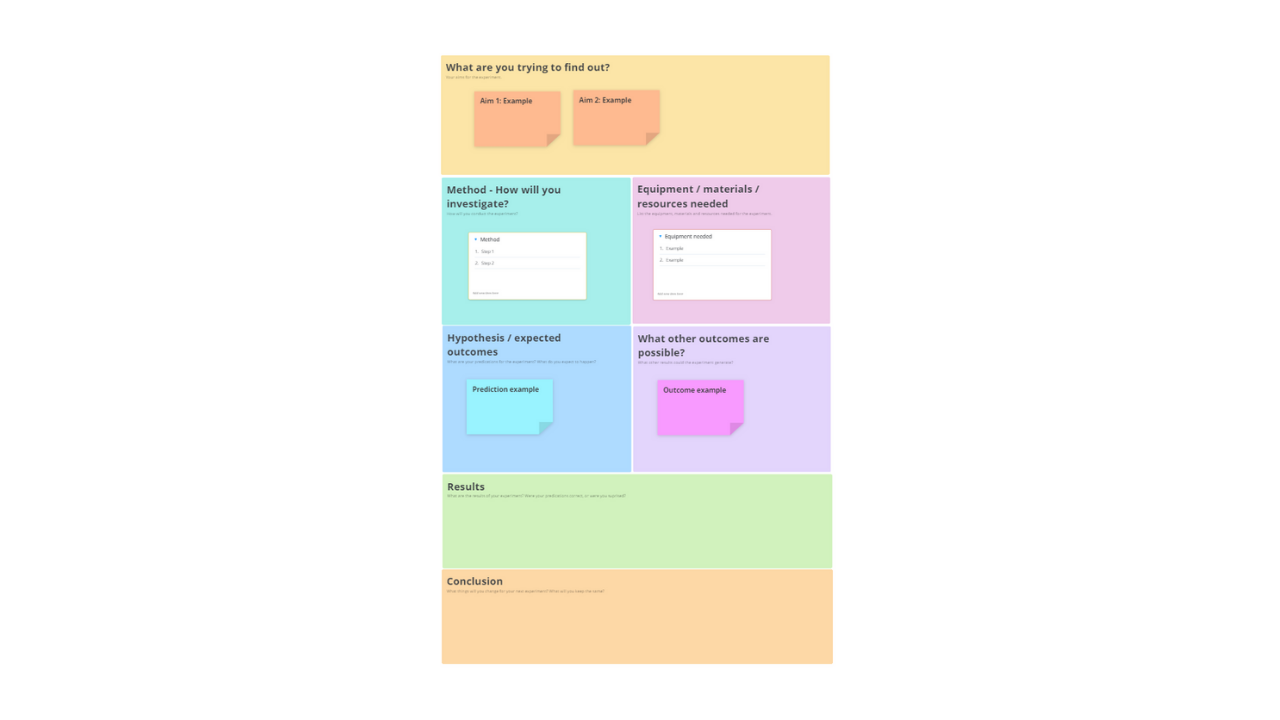

Ayoa’s Experiment Plan template will allow you to effectively plan any scientific experiment, as well as record your observations and results in a clear and concise format. Using this template will allow you to think critically about your experiment plan and analyze your results to help aid your learning.

Ready to get started with this template? It’s ready and waiting in Ayoa! Create your account, then simply open the app, select ‘create whiteboard’ from the homepage and choose this template from the library.

What is an experiment plan?

An experiment plan is commonly used by students throughout their education, particularly during science classes. An experiment plan is put together prior to conducting experiments to allow students to assess what they aim to achieve, as well as record their predictions, outcomes and any useful information regarding the preparation of the experiment.

When planning a science experiment, it’s important to consider the following questions:

What are you trying to find out?

How will you investigate this?

What are your predictions for the experiment?

What other outcomes do you believe are possible?

Why use our Experiment Plan template?

Our Experiment Plan template includes dedicated sections for students to record their answers and take notes when planning their experiment. There’s also space for students to record their results and evaluate their conclusion, which they can fill in once the experiment is over.

Using our Experiment Plan template will help students to think practically about all the elements that need to be considered before conducting an experiment, as well as think critically about what they hope to achieve and what they can learn from the results it produces. The template will also help them learn about the scientific method behind conducting an experiment and how to conduct a successful and fair investigation.

How to use our Experiment Plan template

Students can use our Experiment Plan template before conducting their own experiments. Teachers can also share it with their students when working on a science experiment as a class, which ensures that all students work through the same process.

To access the template, sign up to Ayoa. Once you've signed up, navigate to the homepage to create a new whiteboard, mind map or task board and choose this template from the library.

What are you trying to find out? Begin by evaluating what you hope to achieve from conducting the experiment; what are you trying to find out? Using the sticky notes, lists or text options available in the template, add your experiment aims to this section.

Method – How will you investigate? In this section, explain how you will conduct the experiment. This will be useful for you to refer to during the experiment itself and will help you understand if it had an impact on your end results.

Equipment / materials / resources needed. Here, make a list of all the equipment you’ll need to successfully conduct your experiment. By using lists as shown in the template, you’ll be able to easily add new items and drag and drop them to reorder the list by priority.

Hypothesis / expected outcomes. During this planning process, think about what your predictions for the experiment are; what do you expect to happen? Why do you expect this to happen? Making a note of these predictions will help you to better evaluate your results at the end of your experiment.

What other outcomes are possible? It’s also important to consider what other results the experiment could generate. You can then compare these possible outcomes (alongside your predictions) with your final results to see if you can spot any patterns or unexpected outcomes.

Results. Now that you’ve planned your experiment and set out your expectations, it’s time to conduct your experiment. Once your experiment is complete, return to your template and add your answers to the ‘Results’ section. Here, make a note of all the results you found and whether your predictions materialized.

Conclusion. In this final section, think more critically about how your experiment went. Did everything go as expected, or were you surprised by your results? Were there elements of your planning process that had an impact on your results? If so, what would you change (or keep the same) for future experiments? Add all your ideas to the ‘Conclusion’ section of the template.


19+ Experimental Design Examples (Methods + Types)

Ever wondered how scientists discover new medicines, psychologists learn about behavior, or even how marketers figure out what kind of ads you like? Well, they all have something in common: they use a special plan or recipe called an "experimental design."

Imagine you're baking cookies. You can't just throw random amounts of flour, sugar, and chocolate chips into a bowl and hope for the best. You follow a recipe, right? Scientists and researchers do something similar. They follow a "recipe" called an experimental design to make sure their experiments are set up in a way that the answers they find are meaningful and reliable.

Experimental design is the roadmap researchers use to answer questions. It's a set of rules and steps that researchers follow to collect information, or "data," in a way that is fair, accurate, and makes sense.

Long ago, people didn't have detailed game plans for experiments. They often just tried things out and saw what happened. But over time, people got smarter about this. They started creating structured plans—what we now call experimental designs—to get clearer, more trustworthy answers to their questions.

In this article, we'll take you on a journey through the world of experimental designs. We'll talk about the different types, or "flavors," of experimental designs, where they're used, and even give you a peek into how they came to be.

What Is Experimental Design?

Alright, before we dive into the different types of experimental designs, let's get crystal clear on what experimental design actually is.

Imagine you're a detective trying to solve a mystery. You need clues, right? Well, in the world of research, experimental design is like the roadmap that helps you find those clues. It's like the game plan in sports or the blueprint when you're building a house. Just like you wouldn't start building without a good blueprint, researchers won't start their studies without a strong experimental design.

So, why do we need experimental design? Think about baking a cake. If you toss ingredients into a bowl without measuring, you'll end up with a mess instead of a tasty dessert.

Similarly, in research, if you don't have a solid plan, you might get confusing or incorrect results. A good experimental design helps you ask the right questions (think critically), decide what to measure (come up with an idea), and figure out how to measure it (test it). It also helps you consider things that might mess up your results, like outside influences you hadn't thought of.

For example, let's say you want to find out if listening to music helps people focus better. Your experimental design would help you decide things like: Who are you going to test? What kind of music will you use? How will you measure focus? And, importantly, how will you make sure that it's really the music affecting focus and not something else, like the time of day or whether someone had a good breakfast?

In short, experimental design is the master plan that guides researchers through the process of collecting data, so they can answer questions in the most reliable way possible. It's like the GPS for the journey of discovery!

History of Experimental Design

Around 350 BCE, people like Aristotle were trying to figure out how the world works, but they mostly just thought really hard about things. They didn't test their ideas much. So while they were super smart, their methods weren't always the best for finding out the truth.

Fast forward to the Renaissance (14th to 17th centuries), a time of big changes and lots of curiosity. People like Galileo started to experiment by actually doing tests, like rolling balls down inclined planes to study motion. Galileo's work was cool because he combined thinking with doing. He'd have an idea, test it, look at the results, and then think some more. This approach was a lot more reliable than just sitting around and thinking.

Now, let's zoom ahead to the 19th century. This is when people like Francis Galton, an English polymath, started to get really systematic about experimentation. Galton was obsessed with measuring things. Seriously, he even tried to measure how good-looking people were! His work helped create the foundations for a more organized approach to experiments.

Next stop: the early 20th century. Enter Ronald A. Fisher, a brilliant British statistician. Fisher was a game-changer. He came up with ideas that are like the bread and butter of modern experimental design.

Fisher helped make the "control group" a standard part of experiments. That's a group of people or things that don't get the treatment you're testing, so you can compare them to those who do. He also stressed the importance of "randomization," which means assigning people or things to different groups by chance, like drawing names out of a hat. This makes sure the experiment is fair and the results are trustworthy.

Around the same time, American psychologists like John B. Watson and B.F. Skinner were developing "behaviorism." They focused on studying things that they could directly observe and measure, like actions and reactions.

Skinner even built boxes, called Skinner Boxes, to test how animals like pigeons and rats learn. Their work helped shape how psychologists design experiments today. Watson performed a very controversial experiment called the Little Albert experiment that helped describe behavior through conditioning (in other words, how people learn to behave the way they do).

In the later part of the 20th century and into our time, computers have totally shaken things up. Researchers now use super powerful software to help design their experiments and crunch the numbers.

With computers, they can simulate complex experiments before they even start, which helps them predict what might happen. This is especially helpful in fields like medicine, where getting things right can be a matter of life and death.

Also, did you know that experimental designs aren't just for scientists in labs? They're used by people in all sorts of jobs, like marketing, education, and even video game design! Yes, someone probably ran an experiment to figure out what makes a game super fun to play.

So there you have it—a quick tour through the history of experimental design, from Aristotle's deep thoughts to Fisher's groundbreaking ideas, and all the way to today's computer-powered research. These designs are the recipes that help people from all walks of life find answers to their big questions.

Key Terms in Experimental Design

Before we dig into the different types of experimental designs, let's get comfy with some key terms. Understanding these terms will make it easier for us to explore the various types of experimental designs that researchers use to answer their big questions.

Independent Variable: This is what you change or control in your experiment to see what effect it has. Think of it as the "cause" in a cause-and-effect relationship. For example, if you're studying whether different types of music help people focus, the kind of music is the independent variable.

Dependent Variable: This is what you're measuring to see the effect of your independent variable. In our music and focus experiment, how well people focus is the dependent variable: it's what "depends" on the kind of music played.

Control Group: This is a group of people who don't get the special treatment or change you're testing. They help you see what happens when the independent variable is not applied. If you're testing whether a new medicine works, the control group would take a fake pill, called a placebo, instead of the real medicine.

Experimental Group: This is the group that gets the special treatment or change you're interested in. Going back to our medicine example, this group would get the actual medicine to see if it has any effect.

Randomization: This is like shaking things up in a fair way. You randomly put people into the control or experimental group so that each group is a good mix of different kinds of people. This helps make the results more reliable.

Sample: This is the group of people you're studying. They're a "sample" of a larger group that you're interested in. For instance, if you want to know how teenagers feel about a new video game, you might study a sample of 100 teenagers.

Bias: This is anything that might tilt your experiment one way or another without you realizing it. Like if you're testing a new kind of dog food and you only test it on poodles, that could create a bias because maybe poodles just really like that food and other breeds don't.

Data: This is the information you collect during the experiment. It's like the treasure you find on your journey of discovery!

Replication: This means doing the experiment more than once to make sure your findings hold up. It's like double-checking your answers on a test.

Hypothesis: This is your educated guess about what will happen in the experiment. It's like predicting the end of a movie based on the first half.

Steps of Experimental Design

Alright, let's say you're all fired up and ready to run your own experiment. Cool! But where do you start? Well, designing an experiment is a bit like planning a road trip. There are some key steps you've got to take to make sure you reach your destination. Let's break it down:

- Ask a Question: Before you hit the road, you've got to know where you're going. Same with experiments. You start with a question you want to answer, like "Does eating breakfast really make you do better in school?"

- Do Some Homework: Before you pack your bags, you look up the best places to visit, right? In science, this means reading up on what other people have already discovered about your topic.

- Form a Hypothesis: This is your educated guess about what you think will happen. It's like saying, "I bet this route will get us there faster."

- Plan the Details: Now you decide what kind of car you're driving (your experimental design), who's coming with you (your sample), and what snacks to bring (your variables).

- Randomization: Remember, this is like shuffling a deck of cards. You want to mix up who goes into your control and experimental groups to make sure it's a fair test.

- Run the Experiment: Finally, the rubber hits the road! You carry out your plan, making sure to collect your data carefully.

- Analyze the Data: Once the trip's over, you look at your photos and decide which ones are keepers. In science, this means looking at your data to see what it tells you.

- Draw Conclusions: Based on your data, did you find an answer to your question? This is like saying, "Yep, that route was faster," or "Nope, we hit a ton of traffic."

- Share Your Findings: After a great trip, you want to tell everyone about it, right? Scientists do the same by publishing their results so others can learn from them.

- Do It Again?: Sometimes one road trip just isn't enough. In the same way, scientists often repeat their experiments to make sure their findings are solid.

So there you have it! Those are the basic steps you need to follow when you're designing an experiment. Each step helps make sure that you're setting up a fair and reliable way to find answers to your big questions.

Let's get into examples of experimental designs.

1) True Experimental Design

In the world of experiments, the True Experimental Design is like the superstar quarterback everyone talks about. Born out of the early 20th-century work of statisticians like Ronald A. Fisher, this design is all about control, precision, and reliability.

Researchers carefully pick an independent variable to manipulate (remember, that's the thing they're changing on purpose) and measure the dependent variable (the effect they're studying). Then comes the magic trick—randomization. By randomly putting participants into either the control or experimental group, scientists make sure their experiment is as fair as possible.

No sneaky biases here!

True Experimental Design Pros

The pros of True Experimental Design are like the perks of a VIP ticket at a concert: you get the best and most trustworthy results. Because everything is controlled and randomized, you can feel pretty confident that the results aren't just a fluke.

True Experimental Design Cons

However, there's a catch. Sometimes, it's really tough to set up these experiments in a real-world situation. Imagine trying to control every single detail of your day, from the food you eat to the air you breathe. Not so easy, right?

True Experimental Design Uses

The fields that get the most out of True Experimental Designs are those that need super reliable results, like medical research.

When scientists were developing COVID-19 vaccines, they used this design to run clinical trials. They had control groups that received a placebo (a harmless substance with no effect) and experimental groups that got the actual vaccine. Then they measured how many people in each group got sick. By comparing the two, they could say, "Yep, this vaccine works!"
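To make "comparing the two" a bit more concrete, here's a minimal sketch of one common way to do it: efficacy is estimated as one minus the ratio of the sickness rate in the vaccine group to the sickness rate in the placebo group. The function name is hypothetical and the counts are invented for illustration only; they don't come from any real trial, and real trials also report uncertainty around the estimate.

```python
def vaccine_efficacy(sick_vaccine, n_vaccine, sick_placebo, n_placebo):
    """Return efficacy as 1 - (risk in vaccine group / risk in placebo group)."""
    if n_vaccine <= 0 or n_placebo <= 0:
        raise ValueError("group sizes must be positive")
    risk_vaccine = sick_vaccine / n_vaccine
    risk_placebo = sick_placebo / n_placebo
    if risk_placebo == 0:
        raise ValueError("no cases in the placebo group, so efficacy is undefined")
    return 1 - risk_vaccine / risk_placebo


# Hypothetical counts, invented for illustration only.
efficacy = vaccine_efficacy(sick_vaccine=10, n_vaccine=10_000,
                            sick_placebo=100, n_placebo=10_000)
print(f"Estimated efficacy: {efficacy:.0%}")
```

With these made-up numbers, the vaccine group gets sick at one tenth the rate of the placebo group, which works out to an estimated efficacy of about 90%.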

So next time you read about a groundbreaking discovery in medicine or technology, chances are a True Experimental Design was the VIP behind the scenes, making sure everything was on point. It's been the go-to for rigorous scientific inquiry for nearly a century, and it's not stepping off the stage anytime soon.

2) Quasi-Experimental Design

So, let's talk about the Quasi-Experimental Design. Think of this one as the cool cousin of True Experimental Design. It wants to be just like its famous relative, but it's a bit more laid-back and flexible. You'll find quasi-experimental designs when it's tricky to set up a full-blown True Experimental Design with all the bells and whistles.

Quasi-experiments still play with an independent variable, just like their stricter cousins. The big difference? They don't use randomization. It's like wanting to divide a bag of jelly beans equally between your friends, but you can't quite do it perfectly.

In real life, it's often not possible or ethical to randomly assign people to different groups, especially when dealing with sensitive topics like education or social issues. And that's where quasi-experiments come in.

Quasi-Experimental Design Pros

Even though they lack full randomization, quasi-experimental designs are like the Swiss Army knives of research: versatile and practical. They're especially popular in fields like education, sociology, and public policy.

For instance, when researchers wanted to figure out if the Head Start program, aimed at giving young kids a "head start" in school, was effective, they used a quasi-experimental design. They couldn't randomly assign kids to go or not go to preschool, but they could compare kids who did with kids who didn't.

Quasi-Experimental Design Cons

Of course, quasi-experiments come with their own bag of pros and cons. On the plus side, they're easier to set up and often cheaper than true experiments. But the flip side is that they're not as rock-solid in their conclusions. Because the groups aren't randomly assigned, there's always that little voice saying, "Hey, are we missing something here?"

Quasi-Experimental Design Uses

Quasi-Experimental Design gained traction in the mid-20th century. Researchers were grappling with real-world problems that didn't fit neatly into a laboratory setting. Plus, as society became more aware of ethical considerations, the need for flexible designs increased. So, the quasi-experimental approach was like a breath of fresh air for scientists wanting to study complex issues without a laundry list of restrictions.

In short, if True Experimental Design is the superstar quarterback, Quasi-Experimental Design is the versatile player who can adapt and still make significant contributions to the game.

3) Pre-Experimental Design

Now, let's talk about the Pre-Experimental Design. Imagine it as the beginner's skateboard you get before you try out for all the cool tricks. It has wheels, it rolls, but it's not built for the professional skatepark.

Similarly, pre-experimental designs give researchers a starting point. They let you dip your toes in the water of scientific research without diving in head-first.

So, what's the deal with pre-experimental designs?

Pre-Experimental Designs are the basic, no-frills versions of experiments. Researchers still mess around with an independent variable and measure a dependent variable, but they skip over the whole randomization thing and often don't even have a control group.

It's like baking a cake but forgetting the frosting and sprinkles; you'll get some results, but they might not be as complete or reliable as you'd like.

Pre-Experimental Design Pros

Why use such a simple setup? Because sometimes, you just need to get the ball rolling. Pre-experimental designs are great for quick-and-dirty research when you're short on time or resources. They give you a rough idea of what's happening, which you can use to plan more detailed studies later.

A good example of this is early studies on the effects of screen time on kids. Researchers couldn't control every aspect of a child's life, but they could easily ask parents to track how much time their kids spent in front of screens and then look for trends in behavior or school performance.

Pre-Experimental Design Cons

But here's the catch: pre-experimental designs are like that first draft of an essay. It helps you get your ideas down, but you wouldn't want to turn it in for a grade. Because these designs lack the rigorous structure of true or quasi-experimental setups, they can't give you rock-solid conclusions. They're more like clues or signposts pointing you in a certain direction.

Pre-Experimental Design Uses

This type of design became popular in the early stages of various scientific fields. Researchers used it to scratch the surface of a topic, generate some initial data, and then decide whether the topic was worth exploring further. In other words, pre-experimental designs were the stepping stones that led to more complex, thorough investigations.

So, while Pre-Experimental Design may not be the star player on the team, it's like the practice squad that helps everyone get better. It's the starting point that can lead to bigger and better things.

4) Factorial Design

Now, buckle up, because we're moving into the world of Factorial Design, the multi-tasker of the experimental universe.

Imagine juggling not just one, but multiple balls in the air—that's what researchers do in a factorial design.

In Factorial Design, researchers are not satisfied with just studying one independent variable. Nope, they want to study two or more at the same time to see how they interact.

It's like cooking with several spices to see how they blend together to create unique flavors.

Factorial Design became the talk of the town with the rise of computers. Why? Because this design produces a lot of data, and computers are the number crunchers that help make sense of it all. So, thanks to our silicon friends, researchers can study complicated questions like, "How do diet AND exercise together affect weight loss?" instead of looking at just one of those factors.

Factorial Design Pros

This design's main selling point is its ability to explore interactions between variables. For instance, maybe a new study drug works really well for young people but not so great for older adults. A factorial design could reveal that age is a crucial factor, something you might miss if you only studied the drug's effectiveness in general. It's like being a detective who looks for clues not just in one room but throughout the entire house.
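To make the idea of an interaction concrete, here is a small Python sketch of a 2x2 factorial layout (drug vs. placebo, young vs. older). All of the numbers are invented, and the function names are hypothetical helpers rather than part of any library.

```python
# Hypothetical mean improvement scores for a 2x2 factorial design:
# factor A = treatment (placebo vs. drug), factor B = age group.
# Every number here is invented for illustration only.
cell_means = {
    ("placebo", "young"): 2.0,
    ("drug", "young"): 10.0,
    ("placebo", "older"): 2.0,
    ("drug", "older"): 4.0,
}


def simple_effect(means, age_group):
    """Drug effect within one age group: drug mean minus placebo mean."""
    return means[("drug", age_group)] - means[("placebo", age_group)]


def main_effect_of_drug(means):
    """Average drug effect across both age groups."""
    return (simple_effect(means, "young") + simple_effect(means, "older")) / 2


def interaction_effect(means):
    """Difference between the drug effects in the two age groups.

    Zero means the drug helps both groups equally; anything else
    suggests that treatment and age interact.
    """
    return simple_effect(means, "young") - simple_effect(means, "older")


print("Drug effect (young):", simple_effect(cell_means, "young"))
print("Drug effect (older):", simple_effect(cell_means, "older"))
print("Average drug effect:", main_effect_of_drug(cell_means))
print("Interaction:", interaction_effect(cell_means))
```

A study that only looked at the average drug effect would report a moderate benefit and miss that, in this made-up data, most of it comes from the younger group.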

Factorial Design Cons

However, factorial designs have their own bag of challenges. First off, they can be pretty complicated to set up and run. Imagine coordinating a four-way intersection with lots of cars coming from all directions—you've got to make sure everything runs smoothly, or you'll end up with a traffic jam. Similarly, researchers need to carefully plan how they'll measure and analyze all the different variables.

Factorial Design Uses

Factorial designs are widely used in psychology to untangle the web of factors that influence human behavior. They're also popular in fields like marketing, where companies want to understand how different aspects like price, packaging, and advertising influence a product's success.

And speaking of success, the factorial design has been a hit since statisticians like Ronald A. Fisher (yep, him again!) expanded on it in the early-to-mid 20th century. It offered a more nuanced way of understanding the world, proving that sometimes, to get the full picture, you've got to juggle more than one ball at a time.

So, if True Experimental Design is the quarterback and Quasi-Experimental Design is the versatile player, Factorial Design is the strategist who sees the entire game board and makes moves accordingly.

5) Longitudinal Design

Alright, let's take a step into the world of Longitudinal Design. Picture it as the grand storyteller, the kind who doesn't just tell you about a single event but spins an epic tale that stretches over years or even decades. This design isn't about quick snapshots; it's about capturing the whole movie of someone's life or a long-running process.

You know how you might take a photo every year on your birthday to see how you've changed? Longitudinal Design is kind of like that, but for scientific research.

With Longitudinal Design, instead of measuring something just once, researchers come back again and again, sometimes over many years, to see how things are going. This helps them understand not just what's happening, but why it's happening and how it changes over time.
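Here is a rough Python sketch of what "coming back again and again" produces: several measurements per participant, one for each wave of the study. The participant names, years, and readings are all hypothetical.

```python
# Hypothetical repeated measurements: each participant is measured in
# several "waves" (here, years). Names and numbers are invented.
measurements = {
    "participant_a": {2000: 120, 2005: 126, 2010: 135},
    "participant_b": {2000: 118, 2005: 119, 2010: 121},
}


def change_over_time(waves):
    """Last measurement minus first measurement for one participant."""
    years = sorted(waves)
    return waves[years[-1]] - waves[years[0]]


# Because the same people are measured repeatedly, we can follow each
# person's own trajectory instead of comparing different people.
changes = {name: change_over_time(waves) for name, waves in measurements.items()}
print(changes)
```

A single wave on its own would show only where each person stands in that year, not how far they have moved.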

This design really started to shine in the latter half of the 20th century, when researchers began to realize that some questions can't be answered in a hurry. Think about studies that look at how kids grow up, or research on how a certain medicine affects you over a long period. These aren't things you can rush.

The famous Framingham Heart Study, started in 1948, is a prime example. It's been studying heart health in a small town in Massachusetts for decades, and the findings have shaped what we know about heart disease.

Longitudinal Design Pros

So, what's to love about Longitudinal Design? First off, it's the go-to for studying change over time, whether that's how people age or how a forest recovers from a fire.

Longitudinal Design Cons

But it's not all sunshine and rainbows. Longitudinal studies take a lot of patience and resources. Plus, keeping track of participants over many years can be like herding cats—difficult and full of surprises.

Longitudinal Design Uses

Despite these challenges, longitudinal studies have been key in fields like psychology, sociology, and medicine. They provide the kind of deep, long-term insights that other designs just can't match.

So, if True Experimental Design is the superstar quarterback, Quasi-Experimental Design is the flexible athlete, and Factorial Design is the strategist, then Longitudinal Design is the wise elder who has seen it all and has stories to tell.

6) Cross-Sectional Design

Now, let's flip the script and talk about Cross-Sectional Design, the polar opposite of the Longitudinal Design. If Longitudinal is the grand storyteller, think of Cross-Sectional as the snapshot photographer. It captures a single moment in time, like a selfie that you take to remember a fun day. Researchers using this design collect all their data at one point, providing a kind of "snapshot" of whatever they're studying.

In a Cross-Sectional Design, researchers look at multiple groups all at the same time to see how they're different or similar.

This design rose to popularity in the mid-20th century, mainly because it's so quick and efficient. Imagine wanting to know how people of different ages feel about a new video game. Instead of waiting for years to see how opinions change, you could just ask people of all ages what they think right now. That's Cross-Sectional Design for you—fast and straightforward.

You'll find this type of research everywhere from marketing studies to healthcare. For instance, you might have heard about surveys asking people what they think about a new product or political issue. Those are usually cross-sectional studies, aimed at getting a quick read on public opinion.

Cross-Sectional Design Pros

So, what's the big deal with Cross-Sectional Design? Well, it's the go-to when you need answers fast and don't have the time or resources for a more complicated setup.

Cross-Sectional Design Cons

Remember, speed comes with trade-offs. While you get your results quickly, those results are stuck in time. They can't tell you how things change or why they're changing, just what's happening right now.

Cross-Sectional Design Uses

Also, because they're so quick and simple, cross-sectional studies often serve as the first step in research. They give scientists an idea of what's going on so they can decide if it's worth digging deeper. In that way, they're a bit like a movie trailer, giving you a taste of the action to see if you're interested in seeing the whole film.

So, in our lineup of experimental designs, if True Experimental Design is the superstar quarterback and Longitudinal Design is the wise elder, then Cross-Sectional Design is like the speedy running back—fast, agile, but not designed for long, drawn-out plays.

7) Correlational Design

Next on our roster is the Correlational Design, the keen observer of the experimental world. Imagine this design as the person at a party who loves people-watching. They don't interfere or get involved; they just observe and take mental notes about what's going on.

In a correlational study, researchers don't change or control anything; they simply observe and measure how two variables relate to each other.

The correlational design has roots in the early days of psychology and sociology. Pioneers like Sir Francis Galton used it to study how qualities like intelligence or height could be related within families.

This design is all about asking, "Hey, when this thing happens, does that other thing usually happen too?" For example, researchers might study whether students who have more study time get better grades or whether people who exercise more have lower stress levels.
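One common way to put a number on "does that other thing usually happen too?" is the Pearson correlation coefficient, which runs from -1 to +1. The sketch below computes it by hand in Python; the study-hours and grade figures are invented, and `pearson_r` is a hypothetical helper name.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How the two variables move together, and how much each varies alone.
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variation_x = sum((x - mean_x) ** 2 for x in xs)
    variation_y = sum((y - mean_y) ** 2 for y in ys)
    if variation_x == 0 or variation_y == 0:
        raise ValueError("correlation is undefined when a variable never changes")
    return co_variation / sqrt(variation_x * variation_y)


# Hypothetical data: weekly study hours and exam grades for five students.
study_hours = [1, 2, 3, 4, 5]
grades = [55, 60, 70, 72, 85]

r = pearson_r(study_hours, grades)
print("Correlation between study time and grades:", round(r, 3))
```

A value close to +1 says the two measures rise together. It says nothing about which one, if either, is doing the causing.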

One of the most famous correlational studies you might have heard of is the link between smoking and lung cancer. Back in the mid-20th century, researchers started noticing that people who smoked a lot also seemed to get lung cancer more often. They couldn't say smoking caused cancer—that would require a true experiment—but the strong correlation was a red flag that led to more research and eventually, health warnings.

Correlational Design Pros

This design is great at showing that two (or more) things are related. Correlational designs can make the case that more detailed research is needed on a topic. They can also help us spot patterns or possible causes that we otherwise might not have noticed.

Correlational Design Cons

But here's where you need to be careful: correlational designs can be tricky. Just because two things are related doesn't mean one causes the other. That's like saying, "Every time I wear my lucky socks, my team wins." Well, it's a fun thought, but those socks aren't really controlling the game.

Correlational Design Uses

Despite this limitation, correlational designs are popular in psychology, economics, and epidemiology, to name a few fields. They're often the first step in exploring a possible relationship between variables. Once a strong correlation is found, researchers may decide to conduct more rigorous experimental studies to examine cause and effect.

So, if True Experimental Design is the superstar quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, and Cross-Sectional Design is the speedster, then Correlational Design is the clever scout, identifying interesting patterns but leaving the heavy lifting of proving cause and effect to the other types of designs.

8) Meta-Analysis

Last but not least, let's talk about Meta-Analysis, the librarian of experimental designs.

If other designs are all about creating new research, Meta-Analysis is about gathering up everyone else's research, sorting it, and figuring out what it all means when you put it together.

Imagine a jigsaw puzzle where each piece is a different study. Meta-Analysis is the process of fitting all those pieces together to see the big picture.

The concept of Meta-Analysis started to take shape in the late 20th century, when computers became powerful enough to handle massive amounts of data. It was like someone handed researchers a super-powered magnifying glass, letting them examine multiple studies at the same time to find common trends or results.

You might have heard of the Cochrane Reviews in healthcare. These are systematic reviews, many of which include meta-analyses, that help doctors and policymakers figure out what treatments work best based on all the research that's been done.

For example, if ten different studies show that a certain medicine helps lower blood pressure, a meta-analysis would pull all that information together to give a more precise estimate of how much it helps.
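As a rough sketch of how that "pulling together" can work, the Python below pools three made-up study results with inverse-variance weighting, so that more precise studies count for more. This is the simple fixed-effect approach, chosen here as an assumption for illustration; real meta-analyses often use more involved models, and `pooled_effect` is a hypothetical helper name.

```python
from math import sqrt

# Hypothetical studies of a blood pressure medicine. Each entry is
# (estimated change in mmHg, standard error of that estimate).
# The numbers are invented for illustration.
studies = [(-5.0, 2.0), (-3.0, 1.0), (-4.0, 4.0)]


def pooled_effect(results):
    """Fixed-effect, inverse-variance pooled estimate and its standard error."""
    # A study with a smaller standard error gets a larger weight.
    weights = [1 / se ** 2 for _, se in results]
    total_weight = sum(weights)
    estimate = sum(w * effect for w, (effect, _) in zip(weights, results)) / total_weight
    pooled_se = sqrt(1 / total_weight)
    return estimate, pooled_se


estimate, pooled_se = pooled_effect(studies)
print("Pooled effect:", round(estimate, 2), "mmHg")
print("Pooled standard error:", round(pooled_se, 2))
```

The pooled standard error comes out smaller than that of any single study in the list, which is the sense in which combining studies sharpens the answer.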

Meta-Analysis Pros

The beauty of Meta-Analysis is that it can provide really strong evidence. Instead of relying on one study, you're looking at the whole landscape of research on a topic.

Meta-Analysis Cons

However, it does have some downsides. For one, Meta-Analysis is only as good as the studies it includes. If those studies are flawed, the meta-analysis will be too. It's like baking a cake: if you use bad ingredients, it doesn't matter how good your recipe is—the cake won't turn out well.

Meta-Analysis Uses

Despite these challenges, meta-analyses are highly respected and widely used in many fields like medicine, psychology, and education. They help us make sense of a world that's bursting with information by showing us the big picture drawn from many smaller snapshots.